- David Sciaraffa, Software Development Manager – IBM Db2 Availability

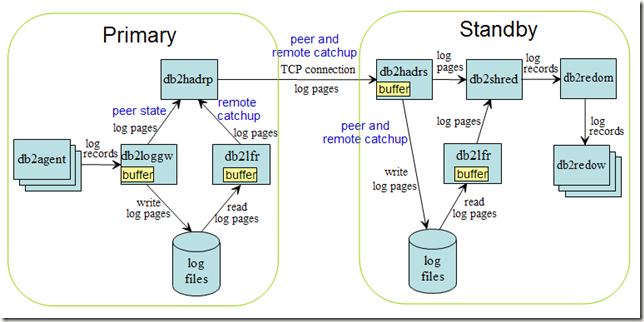

The Db2 High Availability Disaster Recovery feature, better known as HADR, is a database replication solution for disaster recovery. There are few database administrators in the world unfamiliar with HADR, being one of the most successful and widely deployed Db2 features.

While application changes are not required to deploy HADR, and up-and-running is quick and simple, database administrators should accommodate the extra monitoring required to assure the health of the standby database. The IBM Developerworks HADR Wiki provides excellent insight into the nuances of monitoring HADR databases, covering areas of availability and performance. However, while an HADR standby database may be online and available, one or more tablespaces may be offline and unavailable. This condition has caught many database administrators by surprise after a TAKEOVER HADR operation.

An HADR standby database is in continuous replication with it's primary database by replaying transaction log data. If an error occurs during the replay of this log data on the standby database, such as a filesystem full condition or error, a tablespace on the Standby database will be put into an error state and log replay will stop for this tablespace. The Primary database will be unaware of this condition on the standby database, and applications accessing the primary database will continue on as business as usual. Because of this assumed health of the standby database, a subsequent Takeover operation results in the unexpected unavailability of tablespace data when this standby database becomes the primary database.

Monitoring for tablespaces in erroneous states on the Standby database was a little cumbersome prior to Db2 Version 10.5 fixpack9 or v11.1.1.1, as it requiring examining "db2pd -tablespaces" output on the standby database system and checking for an abnormal tablespace state value. Starting in Version 10.5 fixpack 9 or v11.1.1.1, monitoring this condition became much easier, as a new flag 'STANDBY_TABLESPACE_ERROR' was added to the HADR_FLAGS field of db2pd -hadr (or the MON_GET_HADR() table function) directly on the Primary database.

For more details about monitoring this error condition, please see new technote Monitoring and identifying tablespaces in invalid or error state, or tables in Inoperative state on the HADR Standby database.

Now that we've discussed the importance of monitoring for this condition, you're probably wondering how to best resolve the issue.

Prior to Version 10.5 fixpack9 or v11.1.0.0, resolving the issue required a full re-initialization of the standby database (ie. a full database backup image of the primary database must be restored on the standby database and hadr restarted). For large databases this could take a considerable amount of time, and leave the primary database exposed without a disaster recovery counterpart.

Starting in Version 10.5 fixpack 9 (non-pureScale only) or v11.1.0.0, resolving the issue requires only the re-initialization of the erroneous tablespace(s). For example, a tablespace backup image on the primary database can be restored on the standby database and hadr reactivated. A full database backup restore is not required. For large databases this can help to drastically reduce the time required to reestablish HADR disaster resilience.

For more details on how to recover from this condition using a tablespace backup image, please see technote How to recover from tablespace errors on an HADR Standby database.

I hope this information is useful. If you have any thoughts or questions, feel free to submit a comment below.