- David Sciaraffa, Software Development Manager – IBM Db2

In systems configured for disaster resilience through the High Availability Disaster Recovery (HADR) feature, a Primary database is coupled with one or more Standby databases. The Primary database sends transaction log data across the network to the Standby database(s), where this log data is replayed, allowing the Standby database(s) to stay synchronized with any changes that are made on the Primary database. HADR is one of the most popular features of Db2 because of its simplicity of setup and because it requires no application changes.

In order to achieve balance between data protection and performance, HADR supports several levels of synchronization.

HADR synchronization/protection levels:

SYNC: provides the highest level of protection. A transaction commit operation on the Primary database returns to the application only after its transaction log data has been successfully written to disk on the Standby database. (This level usually has a noticeable performance impact versus other protection levels.)

NEARSYNC: provides the next best level of protection. A transaction commit operation on the Primary database returns to the application only after its transaction log data has been successfully received in memory on the Standby database.

ASYNC: A transaction commit operation on the Primary database returns to the application only after its transaction log data has been successfully placed on the Primary-side TCP port for sending to the Standby database.

SUPERASYNC: A transaction commit operation on the Primary database always returns to the application, regardless of the status of the transmission of log data to the Standby database.
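
To make the difference between the four levels concrete, here is a minimal Python sketch of the rule each level applies. It is only an illustration of the list above, not Db2 code: the function name and the three boolean flags are hypothetical names for the states described in the text, and only the Standby-related condition is modelled.

```python
from enum import Enum


class SyncMode(Enum):
    SYNC = "SYNC"
    NEARSYNC = "NEARSYNC"
    ASYNC = "ASYNC"
    SUPERASYNC = "SUPERASYNC"


def commit_may_return(mode, sent_to_tcp, received_in_standby_memory,
                      written_to_standby_disk):
    """Return True if a commit may return to the application.

    The three flags are hypothetical names for how far the transaction's
    log data has travelled towards the Standby database.
    """
    if mode is SyncMode.SUPERASYNC:
        # Never waits on the Standby database.
        return True
    if mode is SyncMode.ASYNC:
        # Waits until the log data is placed on the Primary-side TCP port.
        return sent_to_tcp
    if mode is SyncMode.NEARSYNC:
        # Waits until the Standby database has the log data in memory.
        return received_in_standby_memory
    if mode is SyncMode.SYNC:
        # Waits until the Standby database has written the log data to disk.
        return written_to_standby_disk
    raise ValueError(f"unknown sync mode: {mode!r}")
```

For example, with log data received in Standby memory but not yet written to disk there, the sketch lets a NEARSYNC commit return while a SYNC commit still waits.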

Sync modes are well documented in the IBM Developerworks DB2 HADR Wiki.

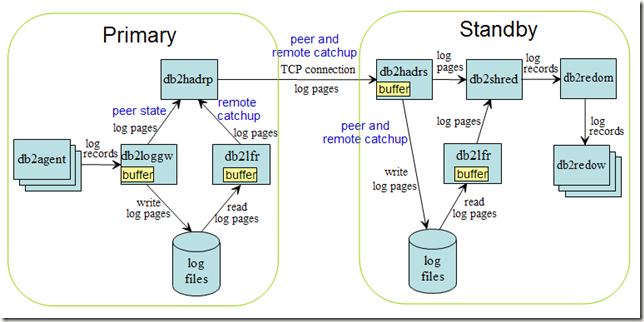

HADR log data process flow:

The underlying protocol and process flow for communication of transaction log data within an HADR system uses parallel processes to achieve high performance, which makes it non-trivial.

The diagram below (borrowed from the IBM Developerworks DB2 HADR Wiki) shows this flow. On the Primary database, a db2agent process (executing an application's SQL operation) generates a transaction log record within an in-memory buffer of transaction log pages. The db2loggw daemon asynchronously writes one or more of these transaction log pages to a transaction log file on the Primary database server and, in parallel, the db2hadrp daemon sends them over the network via a TCP port. On the Standby database, the db2hadrs daemon receives the data via the TCP port. The data is then asynchronously written to a transaction log file on the Standby database by the db2loggw daemon and, in parallel, sent to a db2shred daemon, which parses it and facilitates replay via other daemons on the Standby database.

Identifying network corruption errors:

The transmission of transaction log data across the network from the Primary to the Standby database represents a potential point of failure. When networks are unstable or unreliable, this can manifest as corruption of transaction log data or metadata during transmission.

The Standby database will typically fail with one of the following db2diag.log error messages: "Log page checksum mismatch. pageLso xxxxxx, CheckSum xxxxxx" in Version 10.5 Fixpack 8 or earlier fixpacks, or "Bad page detected. The bytecount is incorrect" and "Bad page detected. Checksum mismatch…" in newer fixpacks.
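
If you want to check a db2diag.log file for these messages, a small script can do the search. The Python sketch below is one hedged way to do it: the function name is made up for illustration, and it matches only the message fragments quoted above, so it makes no assumptions about the rest of the db2diag.log record layout.

```python
import re

# Message fragments quoted in the text above.
CORRUPTION_PATTERNS = [
    re.compile(r"Log page checksum mismatch"),
    re.compile(r"Bad page detected\. The bytecount is incorrect"),
    re.compile(r"Bad page detected\. Checksum mismatch"),
]


def find_corruption_messages(lines):
    """Return (line_number, text) pairs for lines containing a known message.

    `lines` is any iterable of strings, for example an open file object.
    Line numbers start at 1.
    """
    hits = []
    for number, line in enumerate(lines, start=1):
        if any(pattern.search(line) for pattern in CORRUPTION_PATTERNS):
            hits.append((number, line.rstrip("\n")))
    return hits
```

To use it, open the db2diag.log file of the Standby instance (its location depends on your diagnostic path configuration) and pass the file object to `find_corruption_messages`.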

Network corruption errors and Crash Recovery failures on Standby database:

Some integrity checking of transaction log pages on the Standby database is always performed when the log pages are parsed by the db2shred daemon. While this does provide notification of integrity failure, corrupted transaction log data may already have been written into the transaction log file on the Standby database before the db2shred daemon detects the integrity check failure. When the Standby database fails due to the transaction log page integrity check failure, it will generate db2diag.log errors (as described above) and terminate. A subsequent restart of the Standby database (and implicit crash-recovery) will fail due to the same condition. To recover from this condition, a database administrator would typically need to either copy valid transaction log files from the Primary database, or reinitialize the Standby database.

Adding resilience to network corruption errors:

Starting in Version 10.5 Fixpack 9 and Version 11.1.3.3, more aggressive integrity checking can be enabled on the Standby database, along with automatic retry logic.

When the DB2_ENABLE_HADR_LOG_PAGE_INTEGRITY_CHECK registry variable is enabled, integrity checking of transaction log data is performed by the Standby database db2hadrs daemon as soon as it receives log pages from the TCP port, before they are written to log files by the db2loggw daemon or parsed by the db2shred daemon. When an integrity check failure is detected, an error message is printed into the db2diag.log (as described above), and the db2hadrs daemon will automatically attempt to have these log pages retransmitted from the Primary database. This happens transparently to the application and the administrator.
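
The check-then-retransmit idea can be sketched in a few lines of Python. This is a conceptual illustration only, not how Db2 implements it: CRC-32 from `zlib` stands in for whatever checksum Db2 actually uses, and `request_page`, `receive_page`, `max_retries` and `PageCorruptionError` are hypothetical names invented for this example.

```python
import zlib


class PageCorruptionError(Exception):
    """Raised when a page still fails its integrity check after all retries."""


def page_is_intact(payload, expected_checksum):
    # CRC-32 is a stand-in here; the real checksum algorithm is not assumed.
    return zlib.crc32(payload) == expected_checksum


def receive_page(request_page, max_retries=3):
    """Fetch a log page, asking for a retransmit if it arrives corrupted.

    `request_page` is a hypothetical callable returning (payload, checksum).
    Returns (payload, retries_used). The page is verified before it would be
    handed on for writing or parsing.
    """
    for attempt in range(max_retries + 1):
        payload, expected_checksum = request_page()
        if page_is_intact(payload, expected_checksum):
            return payload, attempt
        # Integrity check failed: loop around and request the page again.
    raise PageCorruptionError(
        f"page still corrupted after {max_retries} retransmit attempts"
    )
```

The point of the design is the ordering: because verification happens at the receiving step, a corrupted page never reaches the log file, so a restart does not trip over the same bad data.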

For more details on this new feature, please see:

I hope this information is useful. If you have any thoughts or questions, feel free to submit a comment below.
